DeepLearningMusicGeneration

DEEP LEARNING FOR MUSIC GENERATION

This repository is maintained by Carlos Hernández-Oliván (carloshero@unizar.es) and presents the state of the art in music generation. Most of these references (prior to 2022) are included in the review paper “Music Composition with Deep Learning: A Review”. The authors of the paper thank Jürgen Schmidhuber for his suggestions.

Open a pull request if you want to contribute to this reference list.

You can download a PDF version of this repo here: README.pdf

All images belong to their respective authors.


1. Algorithmic Composition

1992

HARMONET

Hild, H., Feulner, J., & Menzel, W. (1992). HARMONET: A neural net for harmonizing chorales in the style of JS Bach. In Advances in neural information processing systems (pp. 267-274). Paper

Books

- Westergaard, P. (1959). Experimental Music. Composition with an Electronic Computer.
- Todd, P. M. (1989). A connectionist approach to algorithmic composition. Computer Music Journal, 13(4), 27-43.
- Cope, D. (2000). The algorithmic composer (Vol. 16). AR Editions, Inc.
- Nierhaus, G. (2009). Algorithmic composition: paradigms of automated music generation. Springer Science & Business Media.
- Müller, M. (2015). Fundamentals of music processing: Audio, analysis, algorithms, applications. Springer.
- McLean, A., & Dean, R. T. (Eds.). (2018). The Oxford handbook of algorithmic music. Oxford University Press.

2. Neural Network Architectures

| NN Architecture | Year | Authors | Link to original paper | Slides |
|---|---|---|---|---|
| Long Short-Term Memory (LSTM) | 1997 | Sepp Hochreiter, Jürgen Schmidhuber | http://www.bioinf.jku.at/publications/older/2604.pdf | LSTM.pdf |
| Convolutional Neural Network (CNN) | 1998 | Yann LeCun, Léon Bottou, Yoshua Bengio, Patrick Haffner | http://vision.stanford.edu/cs598_spring07/papers/Lecun98.pdf | |
| Variational Autoencoder (VAE) | 2013 | Diederik P. Kingma, Max Welling | https://arxiv.org/pdf/1312.6114.pdf | |
| Generative Adversarial Networks (GAN) | 2014 | Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio | https://arxiv.org/pdf/1406.2661.pdf | |
| Diffusion Models | 2015 | Jascha Sohl-Dickstein, Eric A. Weiss, Niru Maheswaranathan, Surya Ganguli | https://arxiv.org/abs/1503.03585 | |
| Transformer | 2017 | Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin | https://arxiv.org/pdf/1706.03762.pdf | |

3. Deep Learning Models for Music Generation

2023

RL-Chord

Ji, S., Yang, X., Luo, J., & Li, J. (2023). RL-Chord: CLSTM-Based Melody Harmonization Using Deep Reinforcement Learning. IEEE Transactions on Neural Networks and Learning Systems.

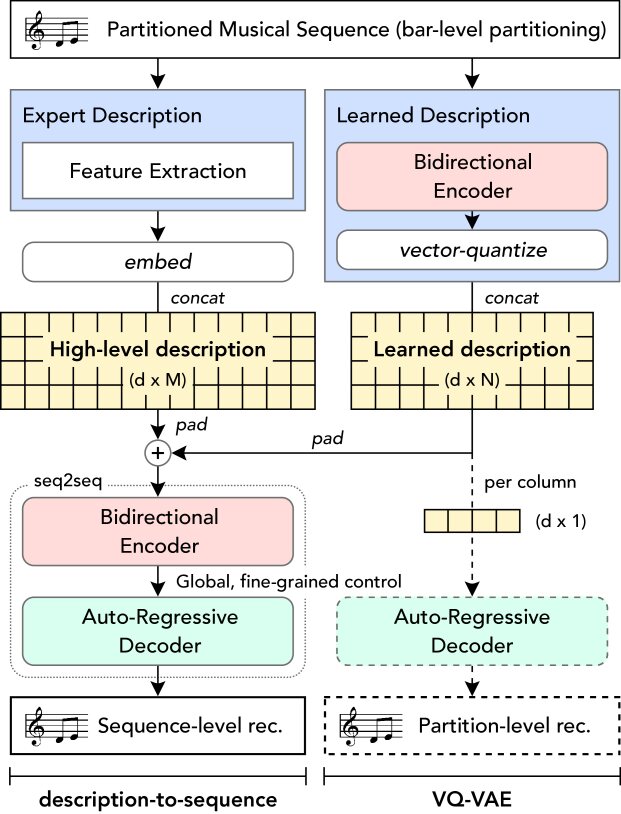

FIGARO: Generating Symbolic Music with Fine-Grained Artistic Control

von Rütte, D., Biggio, L., Kilcher, Y., & Hofmann, T. (2022). FIGARO: Generating Symbolic Music with Fine-Grained Artistic Control. Accepted at ICLR 2023.

2022

Museformer

Yu, B., Lu, P., Wang, R., Hu, W., Tan, X., Ye, W., … & Liu, T. Y. (2022). Museformer: Transformer with Fine- and Coarse-Grained Attention for Music Generation. NeurIPS 2022.

Bar Transformer

Qin, Y., Xie, H., Ding, S., Tan, B., Li, Y., Zhao, B., & Ye, M. (2022). Bar transformer: a hierarchical model for learning long-term structure and generating impressive pop music. Applied Intelligence, 1-19.

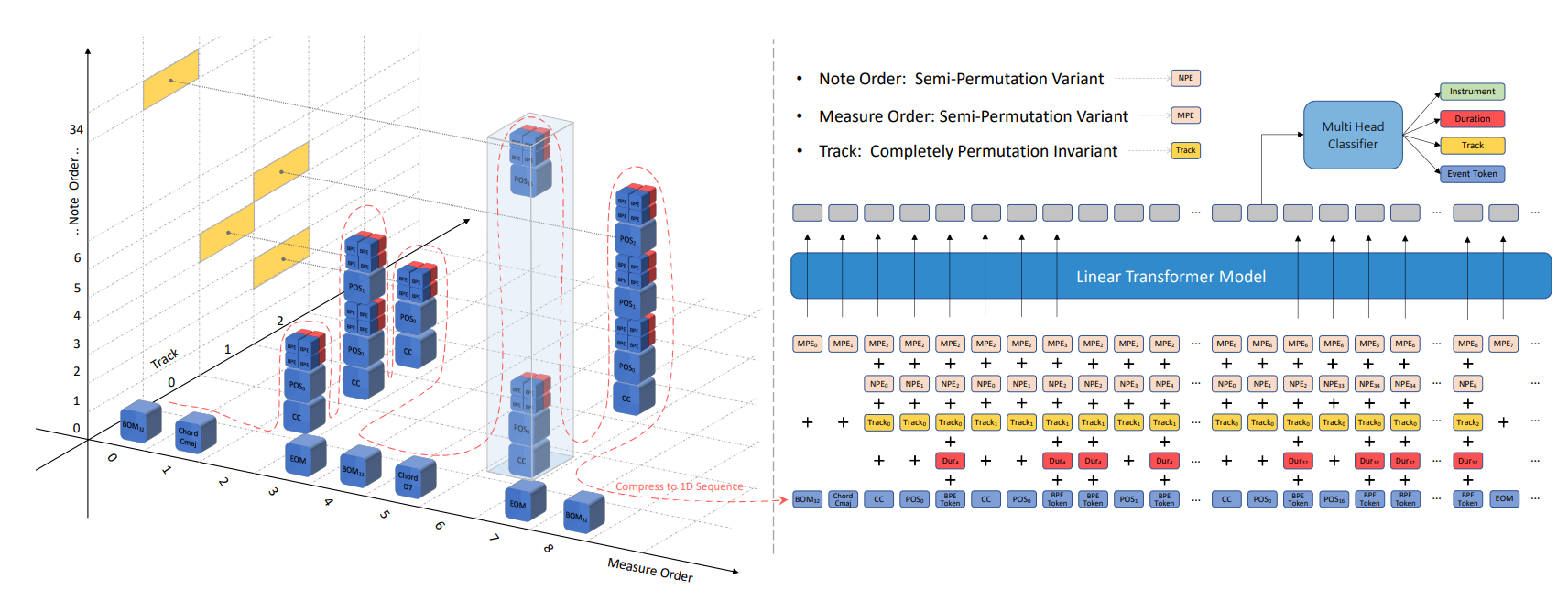

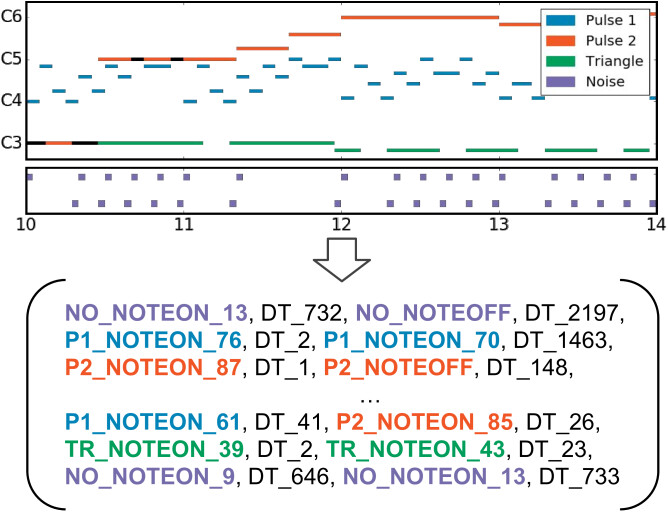

Symphony Generation with Permutation Invariant Language Model

Liu, J., Dong, Y., Cheng, Z., Zhang, X., Li, X., Yu, F., & Sun, M. (2022). Symphony Generation with Permutation Invariant Language Model. arXiv preprint arXiv:2205.05448.

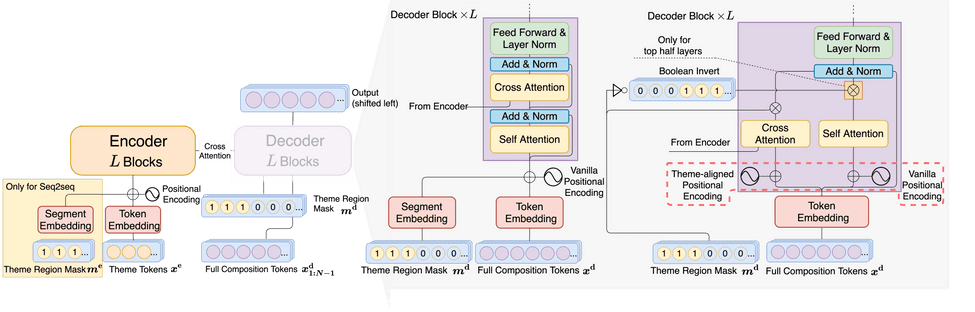

Theme Transformer

Shih, Y. J., Wu, S. L., Zalkow, F., Müller, M., & Yang, Y. H. (2022). Theme Transformer: Symbolic Music Generation with Theme-Conditioned Transformer. IEEE Transactions on Multimedia.

2021

Compound Word Transformer

Hsiao, W. Y., Liu, J. Y., Yeh, Y. C., & Yang, Y. H. (2021, May). Compound word transformer: Learning to compose full-song music over dynamic directed hypergraphs. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 35, No. 1, pp. 178-186).

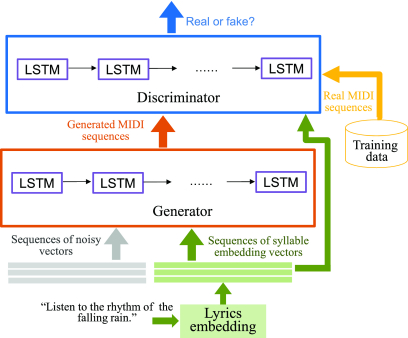

Melody Generation from Lyrics

Yu, Y., Srivastava, A., & Canales, S. (2021). Conditional LSTM-GAN for melody generation from lyrics. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 17(1), 1-20.

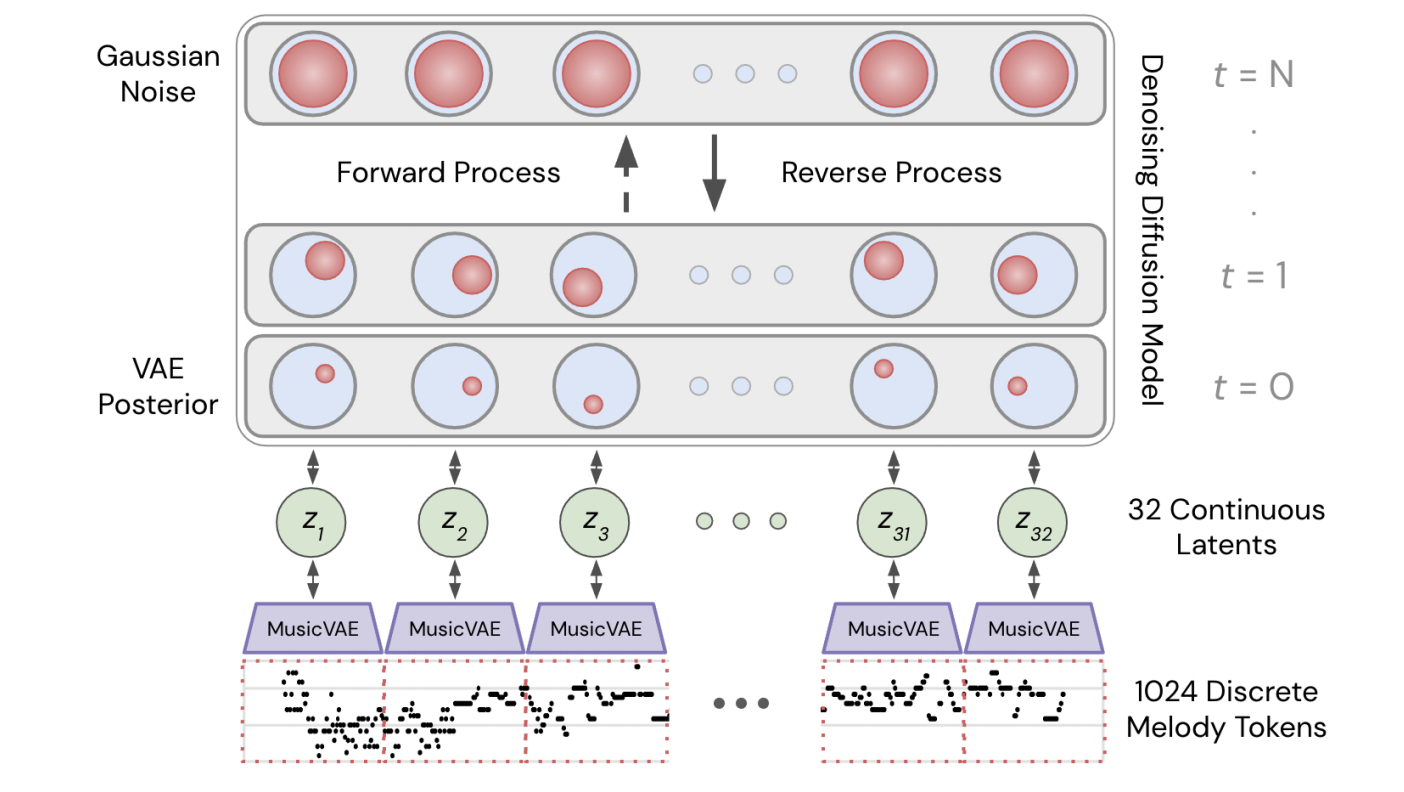

Music Generation with Diffusion Models

Mittal, G., Engel, J., Hawthorne, C., & Simon, I. (2021). Symbolic music generation with diffusion models. arXiv preprint arXiv:2103.16091.

2020

Pop Music Transformer

Huang, Y. S., & Yang, Y. H. (2020, October). Pop music transformer: Beat-based modeling and generation of expressive pop piano compositions. In Proceedings of the 28th ACM International Conference on Multimedia (pp. 1180-1188).

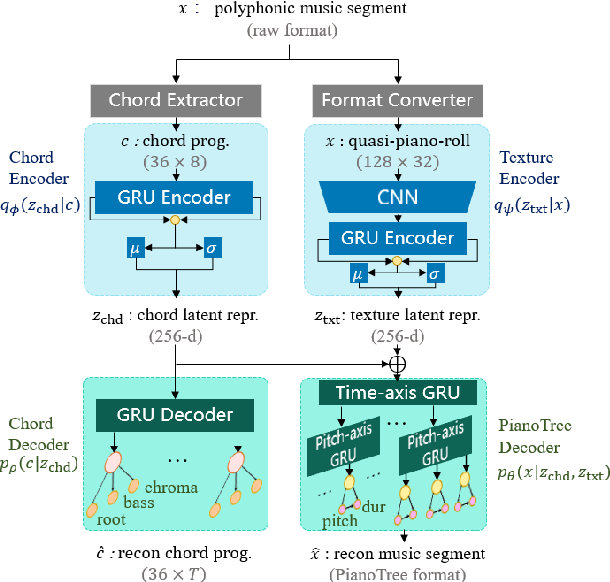

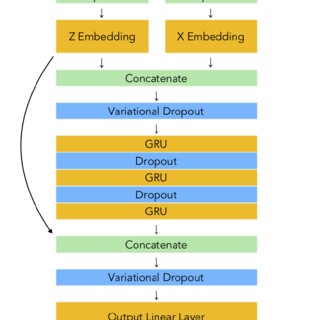

Controllable Polyphonic Music Generation

Wang, Z., Wang, D., Zhang, Y., & Xia, G. (2020). Learning interpretable representation for controllable polyphonic music generation. arXiv preprint arXiv:2008.07122.

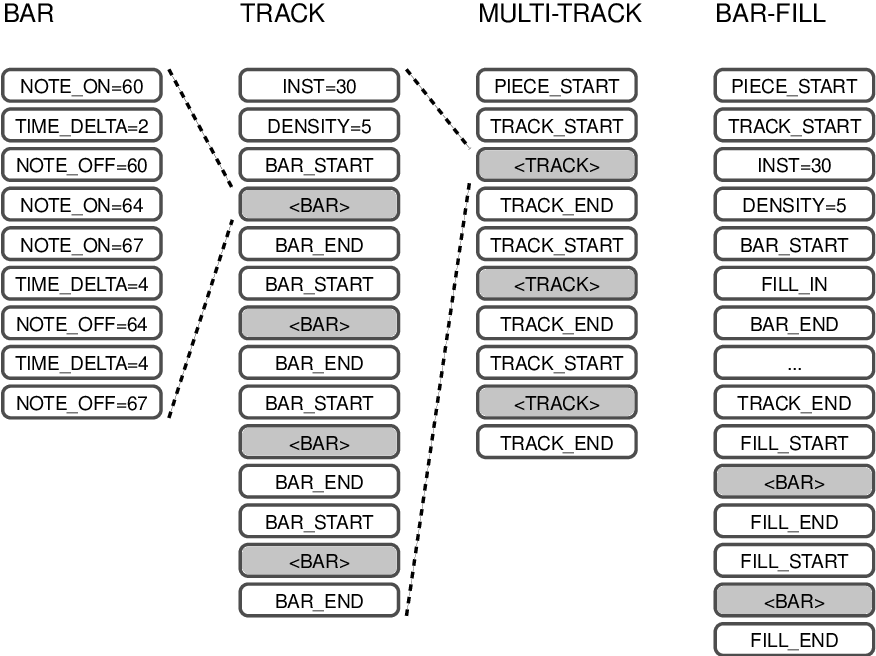

MMM: Multitrack Music Generation

Ens, J., & Pasquier, P. (2020). MMM: Exploring conditional multi-track music generation with the transformer. arXiv preprint arXiv:2008.06048.

Paper Web Colab Github (AI Guru)

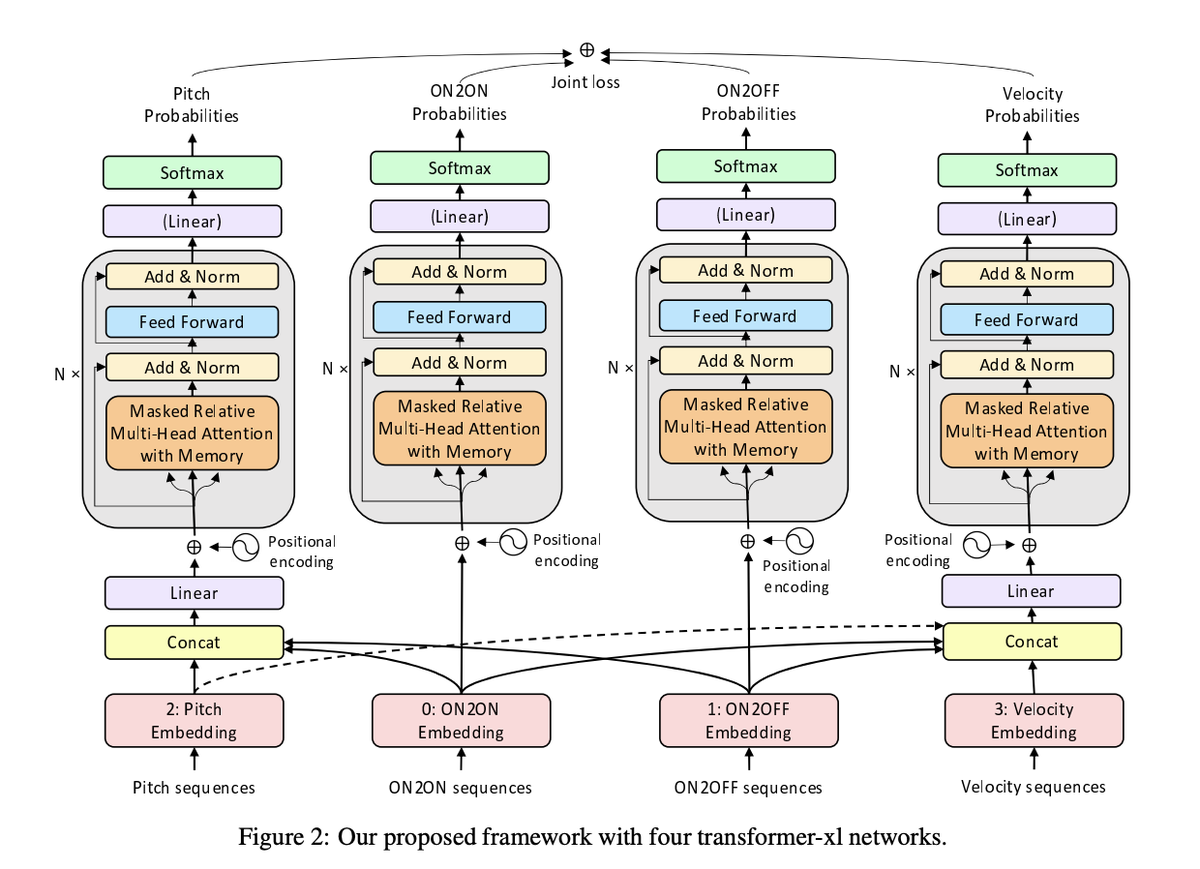

Transformer-XL

Wu, X., Wang, C., & Lei, Q. (2020). Transformer-XL Based Music Generation with Multiple Sequences of Time-valued Notes. arXiv preprint arXiv:2007.07244.

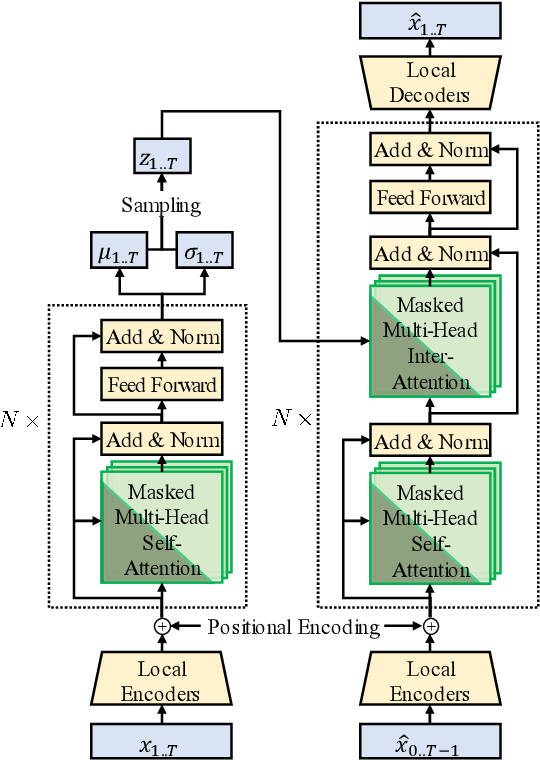

Transformer VAE

Jiang, J., Xia, G. G., Carlton, D. B., Anderson, C. N., & Miyakawa, R. H. (2020, May). Transformer VAE: A hierarchical model for structure-aware and interpretable music representation learning. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 516-520). IEEE.

2019

TonicNet

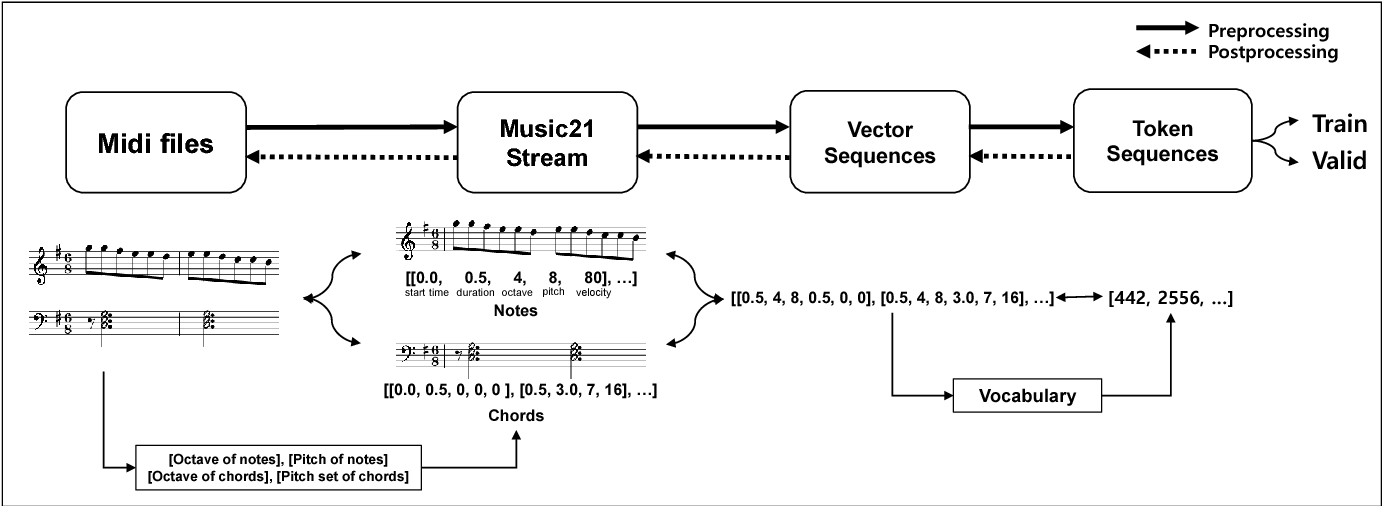

Peracha, O. (2019). Improving polyphonic music models with feature-rich encoding. arXiv preprint arXiv:1911.11775.

LakhNES

Donahue, C., Mao, H. H., Li, Y. E., Cottrell, G. W., & McAuley, J. (2019). LakhNES: Improving multi-instrumental music generation with cross-domain pre-training. arXiv preprint arXiv:1907.04868.

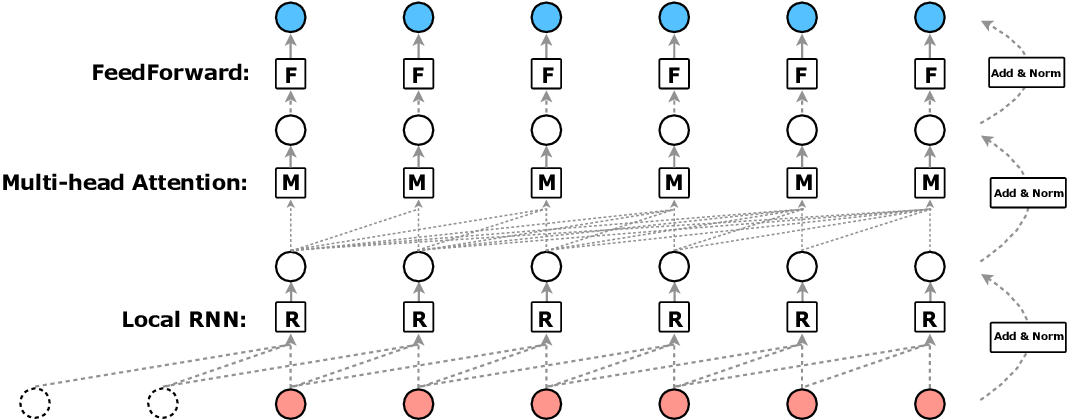

R-Transformer

Wang, Z., Ma, Y., Liu, Z., & Tang, J. (2019). R-transformer: Recurrent neural network enhanced transformer. arXiv preprint arXiv:1907.05572.

Maia Music Generator

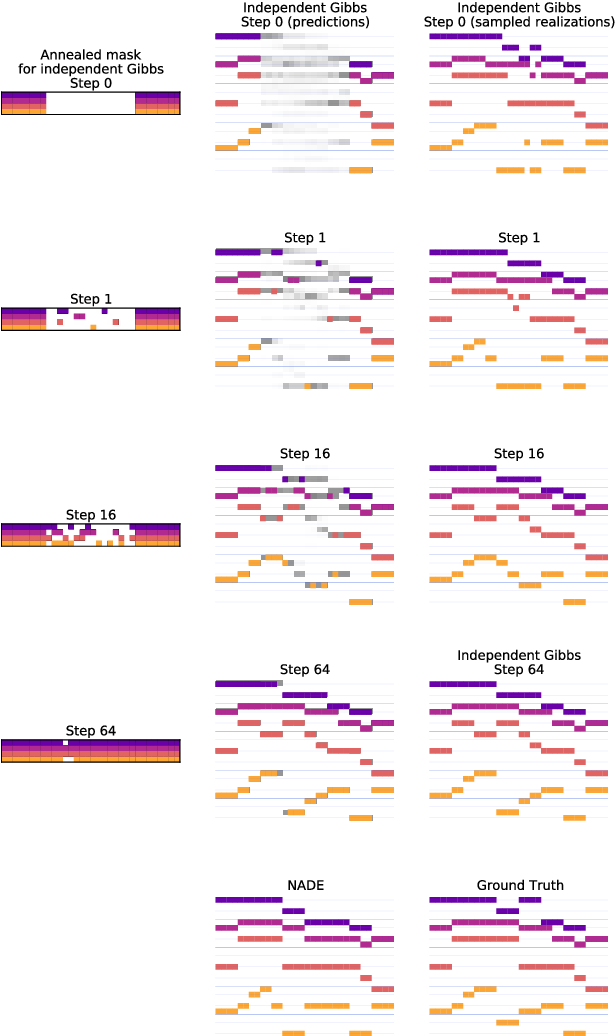

Coconet: Counterpoint by Convolution

Huang, C. Z. A., Cooijmans, T., Roberts, A., Courville, A., & Eck, D. (2019). Counterpoint by convolution. arXiv preprint arXiv:1903.07227.

2018

Music Transformer - Google Magenta

Huang, C. Z. A., Vaswani, A., Uszkoreit, J., Shazeer, N., Simon, I., Hawthorne, C., et al. (2018). Music Transformer. arXiv preprint arXiv:1809.04281.

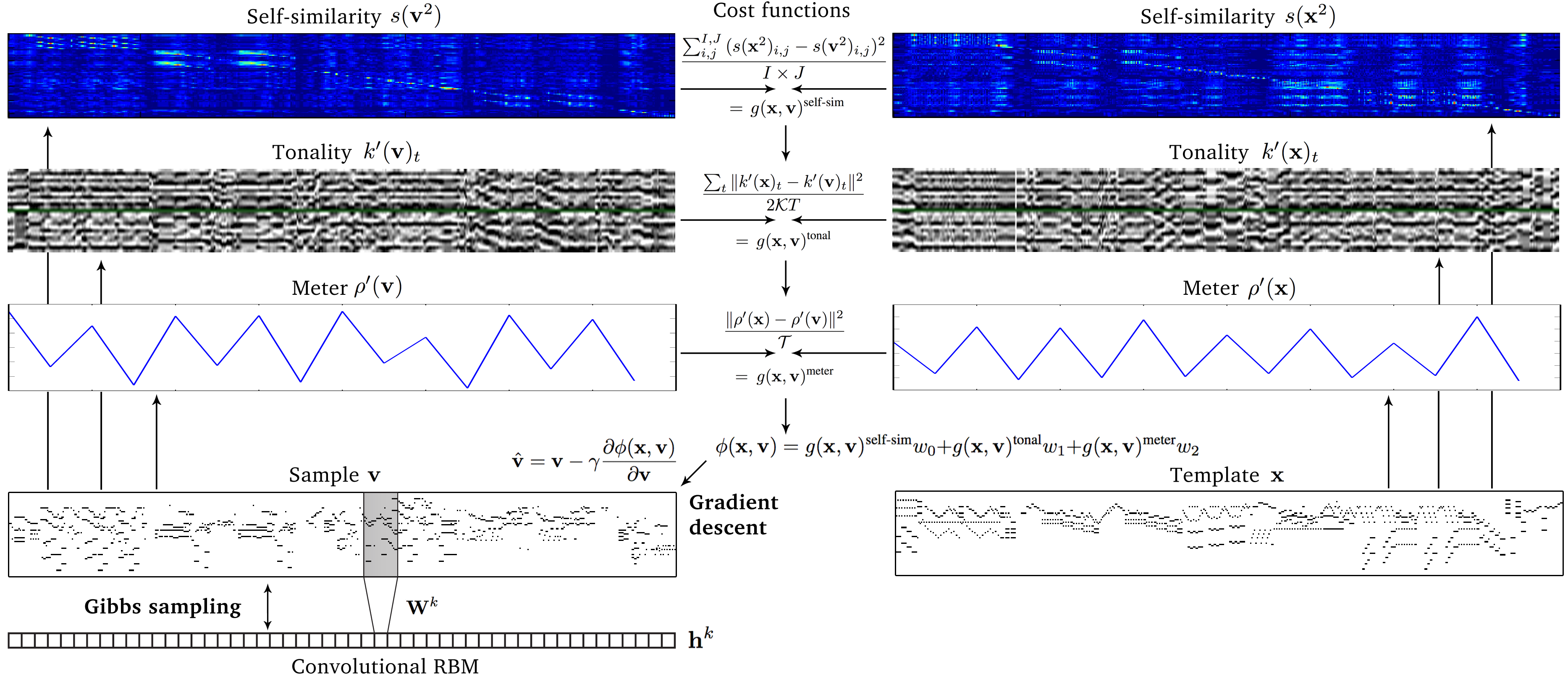

Imposing Higher-level Structure in Polyphonic Music

Lattner, S., Grachten, M., & Widmer, G. (2018). Imposing higher-level structure in polyphonic music generation using convolutional restricted Boltzmann machines and constraints. Journal of Creative Music Systems, 2, 1-31.

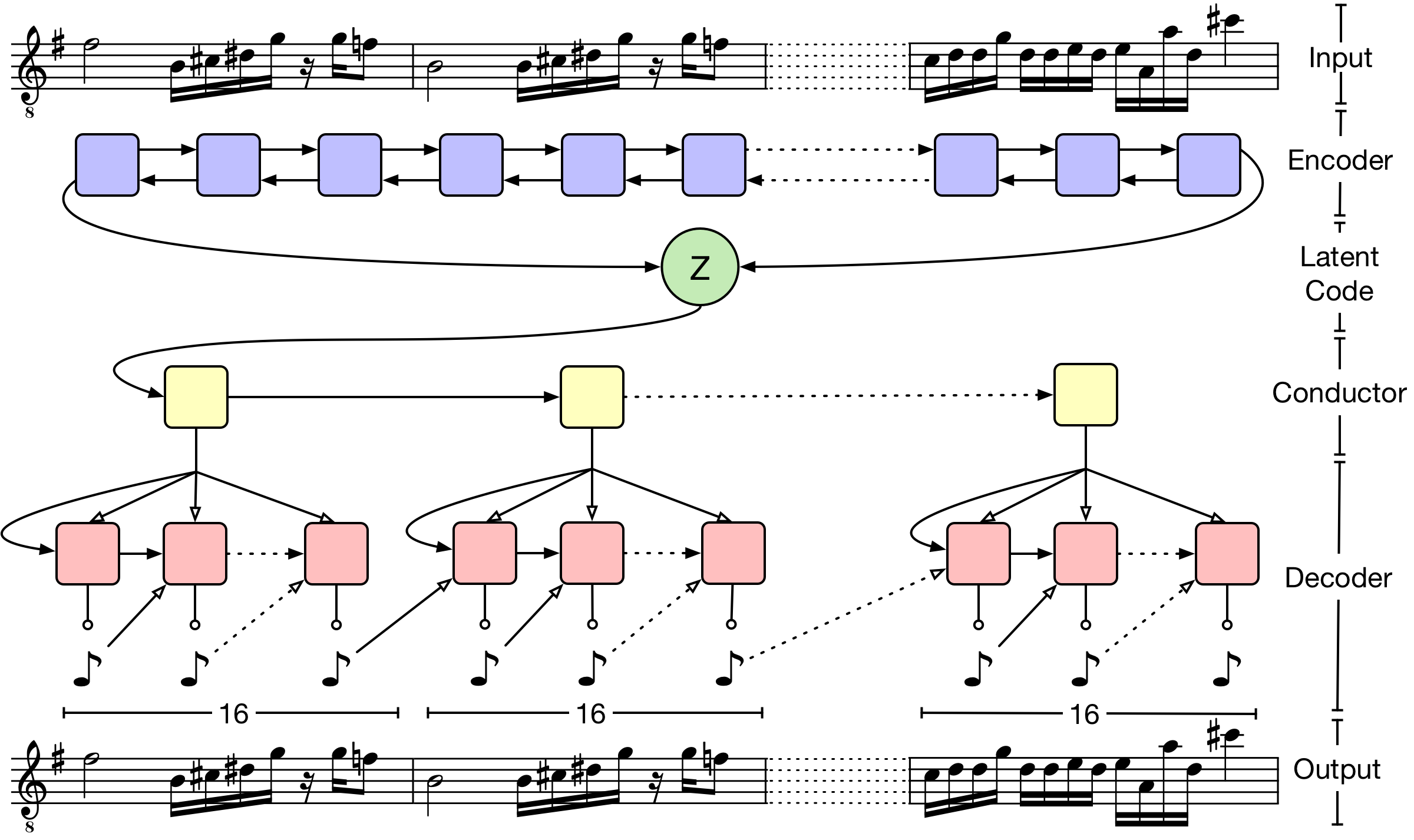

MusicVAE - Google Magenta

Roberts, A., Engel, J., Raffel, C., Hawthorne, C., & Eck, D. (2018, July). A hierarchical latent vector model for learning long-term structure in music. In International Conference on Machine Learning (pp. 4364-4373). PMLR.

Web Paper Code Google Colab Explanation

2017

MorpheuS

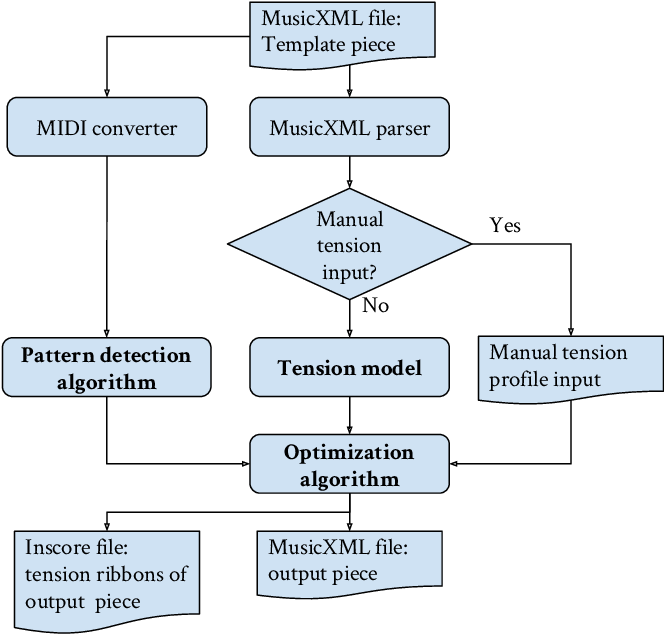

Herremans, D., & Chew, E. (2017). MorpheuS: generating structured music with constrained patterns and tension. IEEE Transactions on Affective Computing, 10(4), 510-523.

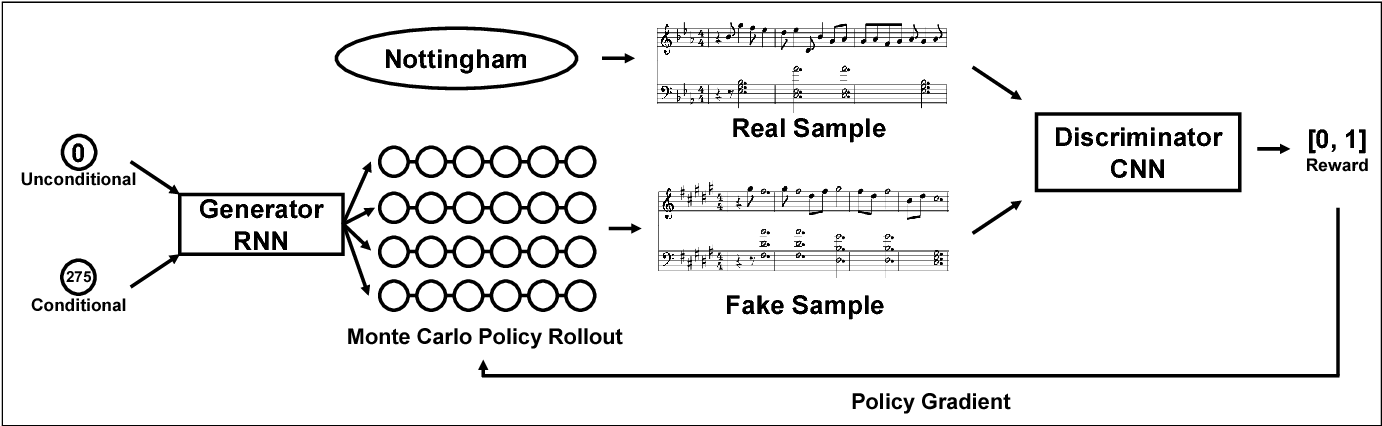

Polyphonic GAN

Lee, S. G., Hwang, U., Min, S., & Yoon, S. (2017). Polyphonic music generation with sequence generative adversarial networks. arXiv preprint arXiv:1710.11418.

BachBot - Microsoft

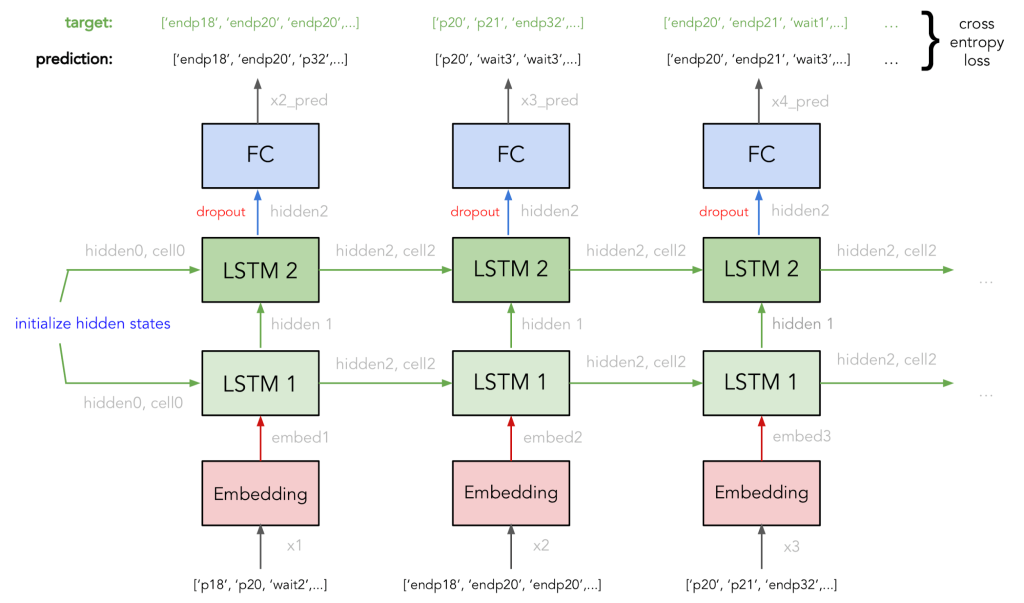

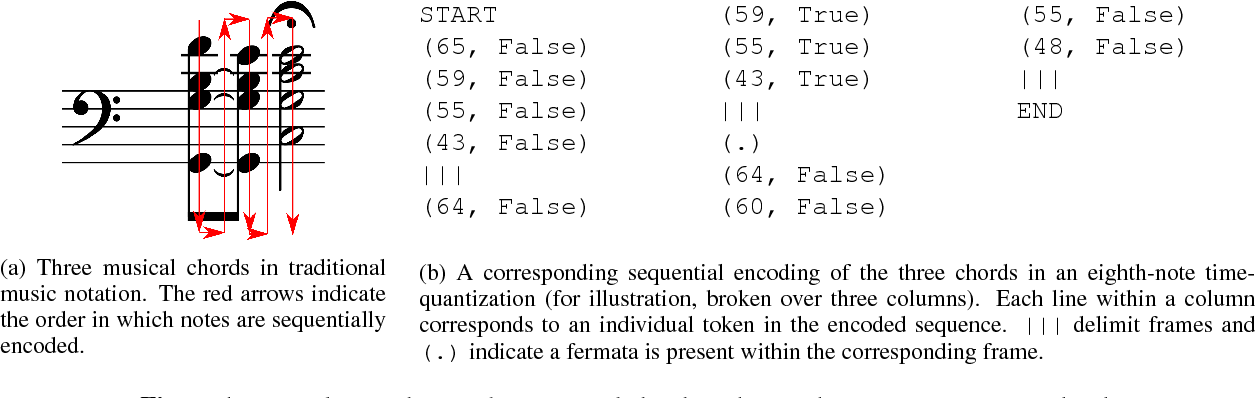

Liang, F. T., Gotham, M., Johnson, M., & Shotton, J. (2017, October). Automatic Stylistic Composition of Bach Chorales with Deep LSTM. In ISMIR (pp. 449-456).

Paper Liang Master Thesis 2016

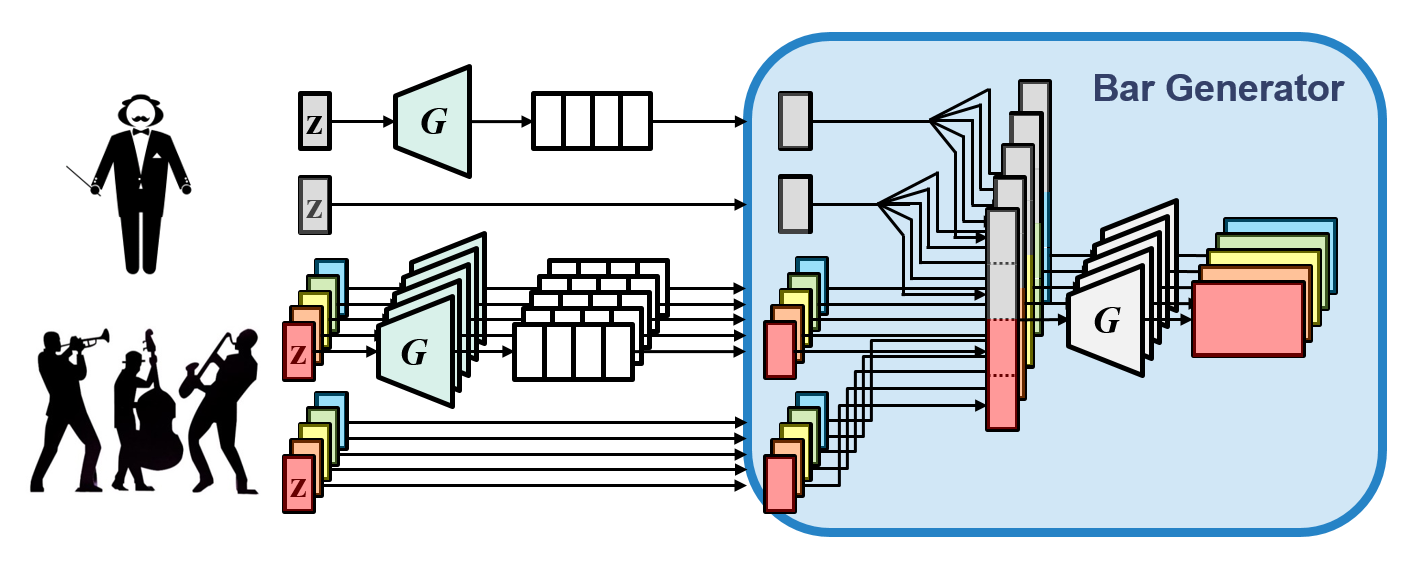

MuseGAN

Dong, H. W., Hsiao, W. Y., Yang, L. C., & Yang, Y. H. (2018, April). MuseGAN: Multi-track sequential generative adversarial networks for symbolic music generation and accompaniment. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 32, No. 1).

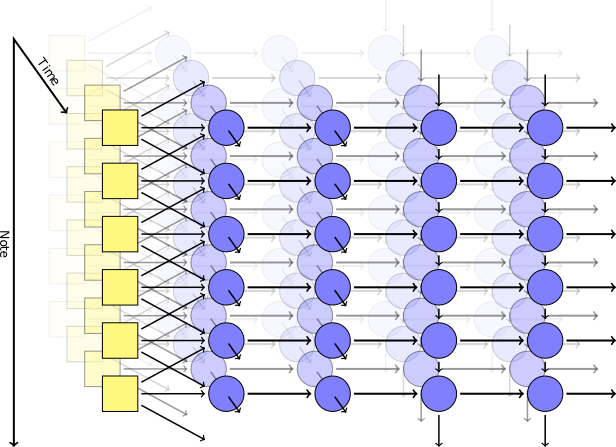

Composing Music with LSTM

Johnson, D. D. (2017, April). Generating polyphonic music using tied parallel networks. In International conference on evolutionary and biologically inspired music and art (pp. 128-143). Springer, Cham.

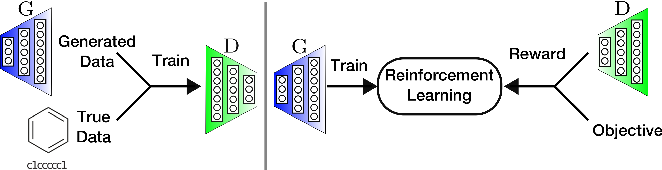

ORGAN

Guimaraes, G. L., Sanchez-Lengeling, B., Outeiral, C., Farias, P. L. C., & Aspuru-Guzik, A. (2017). Objective-reinforced generative adversarial networks (ORGAN) for sequence generation models. arXiv preprint arXiv:1705.10843.

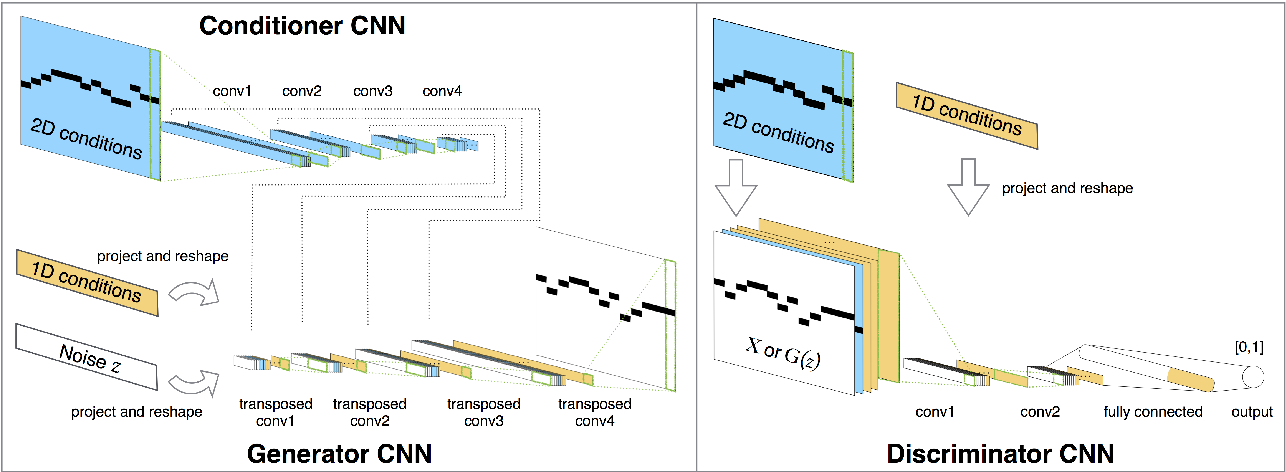

MidiNet

Yang, L. C., Chou, S. Y., & Yang, Y. H. (2017). MidiNet: A convolutional generative adversarial network for symbolic-domain music generation. arXiv preprint arXiv:1703.10847.

2016

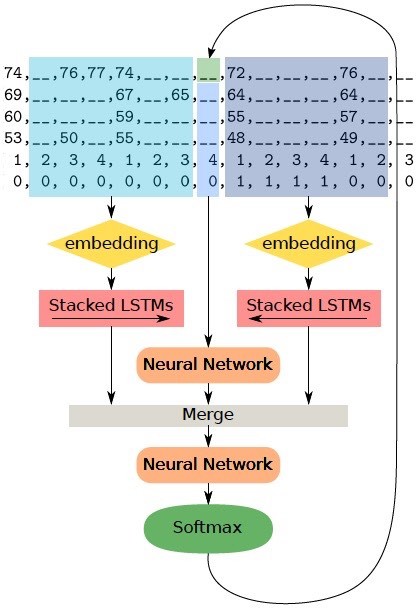

DeepBach

Hadjeres, G., Pachet, F., & Nielsen, F. (2017, July). DeepBach: a steerable model for Bach chorales generation. In International Conference on Machine Learning (pp. 1362-1371). PMLR.

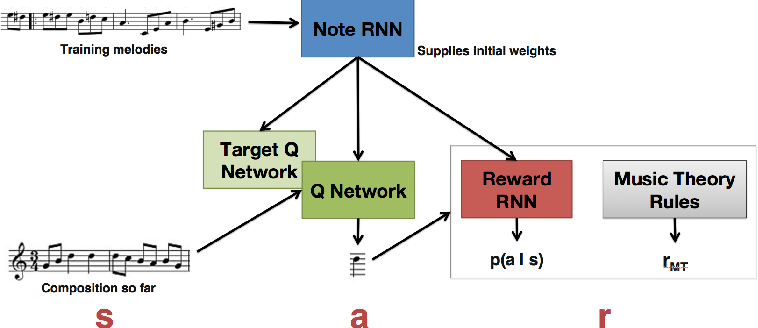

Fine-Tuning with RL

Jaques, N., Gu, S., Turner, R. E., & Eck, D. (2016). Generating music by fine-tuning recurrent neural networks with reinforcement learning.

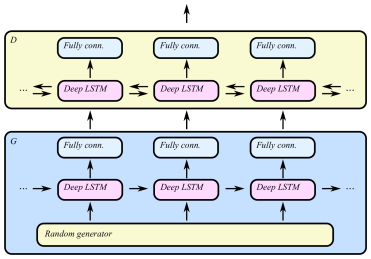

C-RNN-GAN

Mogren, O. (2016). C-RNN-GAN: Continuous recurrent neural networks with adversarial training. arXiv preprint arXiv:1611.09904.

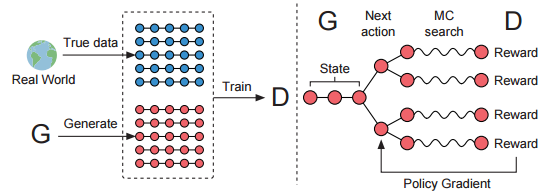

SeqGAN

Yu, L., Zhang, W., Wang, J., & Yu, Y. (2017, February). SeqGAN: Sequence generative adversarial nets with policy gradient. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 31, No. 1).

2002

Temporal Structure in Music

Eck, D., & Schmidhuber, J. (2002, September). Finding temporal structure in music: Blues improvisation with LSTM recurrent networks. In Proceedings of the 12th IEEE workshop on neural networks for signal processing (pp. 747-756). IEEE.

1980s - 1990s

Mozer, M. C. (1994). Neural network music composition by prediction: Exploring the benefits of psychoacoustic constraints and multi-scale processing. Connection Science, 6(2-3), 247-280.

Books and Reviews

Books

- Briot, J. P., Hadjeres, G., & Pachet, F. (2020). Deep learning techniques for music generation (pp. 1-249). Springer.

Reviews

- Hernandez-Olivan, C., & Beltran, J. R. (2021). Music composition with deep learning: A review. arXiv preprint arXiv:2108.12290. Paper
- Ji, S., Luo, J., & Yang, X. (2020). A Comprehensive Survey on Deep Music Generation: Multi-level Representations, Algorithms, Evaluations, and Future Directions. arXiv preprint arXiv:2011.06801. Paper
- Briot, J. P., Hadjeres, G., & Pachet, F. D. (2017). Deep learning techniques for music generation–a survey. arXiv preprint arXiv:1709.01620. Paper

4. Audio Generation

2023

VALL-E X

Zhang, Z., Zhou, L., Wang, C., Chen, S., Wu, Y., Liu, S., … & Wei, F. (2023). Speak Foreign Languages with Your Own Voice: Cross-Lingual Neural Codec Language Modeling. arXiv preprint arXiv:2303.03926.

ERNIE Music

Zhu, P., Pang, C., Wang, S., Chai, Y., Sun, Y., Tian, H., & Wu, H. (2023). ERNIE-Music: Text-to-Waveform Music Generation with Diffusion Models. arXiv preprint arXiv:2302.04456.

Multi-Source Diffusion Models

Mariani, G., Tallini, I., Postolache, E., Mancusi, M., Cosmo, L., & Rodolà, E. (2023). Multi-Source Diffusion Models for Simultaneous Music Generation and Separation. arXiv preprint arXiv:2302.02257.

SingSong

Donahue, C., Caillon, A., Roberts, A., Manilow, E., Esling, P., Agostinelli, A., … & Engel, J. (2023). SingSong: Generating musical accompaniments from singing. arXiv preprint arXiv:2301.12662.

AudioLDM

Liu, H., Chen, Z., Yuan, Y., Mei, X., Liu, X., Mandic, D., … & Plumbley, M. D. (2023). AudioLDM: Text-to-Audio Generation with Latent Diffusion Models. arXiv preprint arXiv:2301.12503.

Paper Samples [GitHub](https://github.com/haoheliu/AudioLDM)

Mousai

Schneider, F., Jin, Z., & Schölkopf, B. (2023). Moûsai: Text-to-Music Generation with Long-Context Latent Diffusion. arXiv preprint arXiv:2301.11757.

Make-An-Audio

Huang, R., Huang, J., Yang, D., Ren, Y., Liu, L., Li, M., … & Zhao, Z. (2023). Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models. arXiv preprint arXiv:2301.12661.

Noise2Music

Huang, Q., Park, D. S., Wang, T., Denk, T. I., Ly, A., Chen, N., … & Han, W. (2023). Noise2Music: Text-conditioned Music Generation with Diffusion Models. arXiv preprint arXiv:2302.03917.

Msanii

Maina, K. (2023). Msanii: High Fidelity Music Synthesis on a Shoestring Budget. arXiv preprint arXiv:2301.06468.

MusicLM

Agostinelli, A., Denk, T. I., Borsos, Z., Engel, J., Verzetti, M., Caillon, A., … & Frank, C. (2023). MusicLM: Generating music from text. arXiv preprint arXiv:2301.11325.

2022

Musika

Pasini, M., & Schlüter, J. (2022). Musika! Fast Infinite Waveform Music Generation. arXiv preprint arXiv:2208.08706.

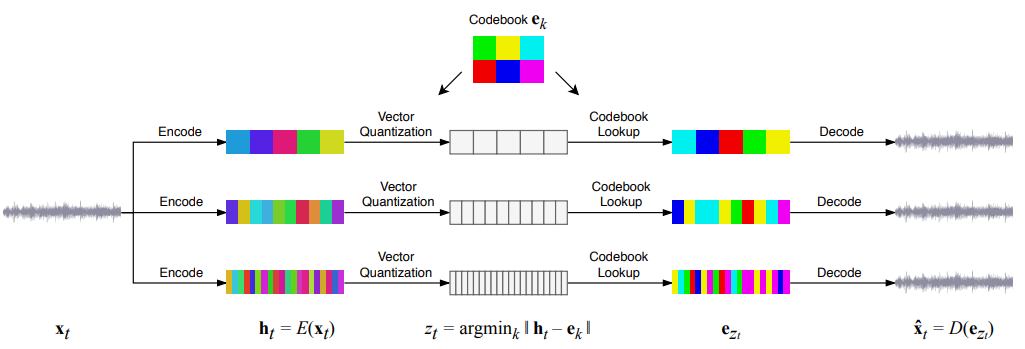

AudioLM

Borsos, Z., Marinier, R., Vincent, D., Kharitonov, E., Pietquin, O., Sharifi, M., … & Zeghidour, N. (2022). AudioLM: a language modeling approach to audio generation. arXiv preprint arXiv:2209.03143.

2021

RAVE

Caillon, A., & Esling, P. (2021). RAVE: A variational autoencoder for fast and high-quality neural audio synthesis. arXiv preprint arXiv:2111.05011.

2020

Jukebox - OpenAI

2019

MuseNet - OpenAI

5. Datasets

- JSB Chorales Dataset Web
- Maestro Dataset Web
- The Lakh MIDI Dataset v0.1 Web Tutorial IPython

6. Journals and Conferences

- International Society for Music Information Retrieval (ISMIR) Web
- IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) Web
- Elsevier Signal Processing Journal Web
- Association for the Advancement of Artificial Intelligence (AAAI) Web
- Journal of Artificial Intelligence Research (JAIR) Web
- International Joint Conferences on Artificial Intelligence (IJCAI) Web
- International Conference on Learning Representations (ICLR) Web
- IET Signal Processing Journal Web
- Journal of New Music Research (JNMR) Web
- Audio Engineering Society - Conference on Semantic Audio (AES) Web
- International Conference on Digital Audio Effects (DAFx) Web

7. Authors

- David Cope Web
- Colin Raffel Web
- Jesse Engel Web
- Douglas Eck Web
- Anna Huang Web
- François Pachet Web
- Jeff Ens Web
- Philippe Pasquier Web

8. Research Groups and Labs

- Google Magenta Web
- Audiolabs Erlangen Web
- Music Informatics Group Web
- Music and Artificial Intelligence Lab Web
- Metacreation Lab Web

9. Apps for Music Generation with AI

- AIVA (paid) Web
- Amper Music (paid) Web
- Ecrett Music (paid) Web
- Humtap (free, iOS) Web
- Amadeus Code (free/paid, iOS) Web
- Computoser (free) Web
- Brain.fm (paid) Web

10. Other Resources

- Bustena (a Spanish-language website for learning harmony theory) Web